Introduction

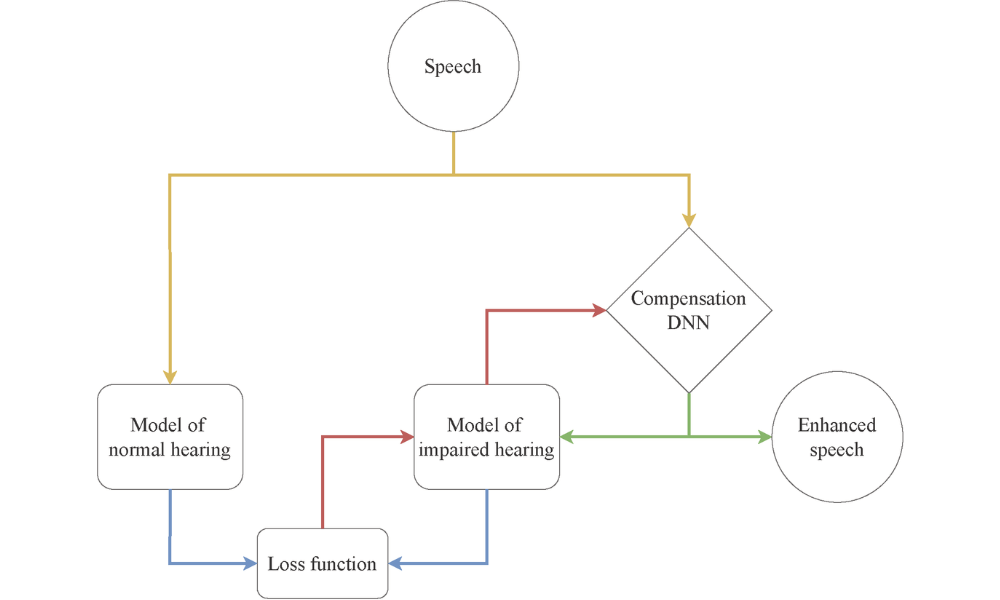

Compensating for hearing loss by providing amplification tailored to the hearing loss is the core function of any hearing device. The aim is to give the user the best possible audibility, loudness, and speech intelligibility. Current algorithms are based on loudness growth models associated with hearing loss and offer little customisation. The present project takes a new approach. A deep neural network is trained to perform hearing loss compensation based on a computational model of the impaired inner ear, and the training is done by passing many different signals through that model.
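The description does not specify the training objective. This sketch assumes one common choice for training a compensator through an auditory model: minimise the difference between the normal-hearing model's response to the unprocessed input and the impaired model's response to the processed input. Everything here is toy-scale and hypothetical. `normal_ear` and `impaired_ear` are one-line stand-ins that work on band levels in dB, and `impaired_ear` includes a crude loudness-recruitment term. Neither is a published auditory model. The deep neural network is also swapped for a two-parameter, level-dependent gain per band, trained by plain gradient descent.

```python
"""Toy sketch: train a level-dependent gain through a hypothetical ear model.

Levels are band levels in dB. Neither 'ear' model is a published auditory
model; the trainable compensator is a two-parameter gain per band, standing
in for a deep neural network.
"""


def normal_ear(level_db):
    """Hypothetical normal-hearing model: the response equals the input level."""
    return level_db


def impaired_ear(level_db, loss_db):
    """Hypothetical impaired model: threshold raised by loss_db, with a steeper
    (recruiting) response that catches up with normal hearing at 100 dB."""
    slope = 100.0 / (100.0 - loss_db)
    return slope * max(level_db - loss_db, 0.0)


def train_band(loss_db, levels_db, steps=10000, lr=0.5):
    """Fit gain(L) = a + b * L/100 for one band by gradient descent on the mean
    squared difference between impaired_ear(L + gain) and normal_ear(L)."""
    slope = 100.0 / (100.0 - loss_db)
    step = lr / slope ** 2  # steeper impaired responses get smaller steps
    a, b = 0.0, 0.0
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for level in levels_db:
            x = level / 100.0
            aided = level + a + b * x
            # Below threshold the impaired output is flat, so the gradient is zero.
            if aided > loss_db:
                err = impaired_ear(aided, loss_db) - normal_ear(level)
                grad_a += 2.0 * err * slope
                grad_b += 2.0 * err * slope * x
        a -= step * grad_a / len(levels_db)
        b -= step * grad_b / len(levels_db)
    return a, b


if __name__ == "__main__":
    training_levels = list(range(20, 85, 5))  # 20, 25, ..., 80 dB
    hypothetical_losses = [10.0, 20.0, 40.0, 60.0]  # one value per frequency band
    for loss in hypothetical_losses:
        a, b = train_band(loss, training_levels)
        print(f"loss {loss:4.0f} dB: gain at 50 dB = {a + b * 0.5:5.1f} dB, "
              f"gain at 80 dB = {a + b * 0.8:5.1f} dB")
```

The optimum for this toy model can be worked out by hand as gain = loss × (1 − L/100). The learned gain therefore shrinks as the input level rises, so a compressive characteristic comes out of the training objective rather than being written in as a rule. A real auditory model would not have such a closed form, which is where a network trained by gradient descent becomes useful.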

Funded by Innovationsfonden Danmark.

Aims

Because the project is high-risk, the first aim is to verify the feasibility of the basic idea: is hearing loss compensation via auditory models and deep neural networks possible? Second, does it provide any user benefit, measured via, e.g., speech intelligibility and sound quality? Third, can it be personalized to match the individual hearing loss better? Lastly, the obtained compensation should be studied with respect to its electroacoustic properties and compared to traditional hearing loss compensation.

Methodology

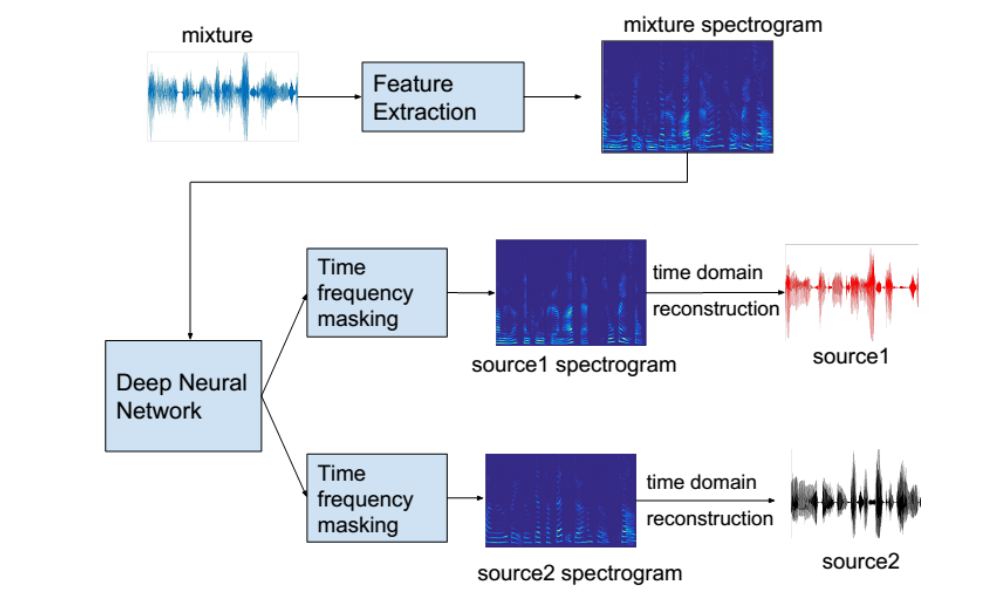

First, the project will review a number of published auditory models and select the most relevant one. Then, a suitable deep learning framework will be established and trained on a variety of hearing losses and sound signals, notably speech in noise.

The trained network will be evaluated by inspecting and measuring the compensated output. Once the output is satisfactory, the efficacy of the resulting hearing loss compensation will be assessed by testing speech intelligibility and sound quality with hearing-impaired listeners.
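One basic way to measure a compensator's output is to treat it as a black box and describe it by its input/output function and its static compression ratio. The compression ratio is the change in input level divided by the resulting change in output level. Both are standard electroacoustic descriptors of hearing aids. In this sketch, `toy_compensator_gain` is a hypothetical stand-in for a trained network. In practice the output levels would be measured on test signals passed through the network.

```python
def toy_compensator_gain(input_db):
    """Hypothetical compensator: 60 dB of gain at 0 dB input, falling
    linearly with input level (stand-in for a trained network)."""
    return 60.0 * (1.0 - input_db / 100.0)


def io_function(gain_fn, levels_db):
    """Output level for each input level (output = input + gain)."""
    return [level + gain_fn(level) for level in levels_db]


def compression_ratio(gain_fn, low_db, high_db):
    """Static compression ratio between two input levels:
    change in input level divided by change in output level."""
    out_low, out_high = io_function(gain_fn, [low_db, high_db])
    return (high_db - low_db) / (out_high - out_low)


if __name__ == "__main__":
    levels = [40.0, 50.0, 60.0, 70.0, 80.0]
    for level, out in zip(levels, io_function(toy_compensator_gain, levels)):
        print(f"in {level:4.0f} dB -> out {out:5.1f} dB, gain {out - level:4.1f} dB")
    ratio = compression_ratio(toy_compensator_gain, 50.0, 80.0)
    print(f"compression ratio between 50 and 80 dB: {ratio:.2f}")
```

A ratio above 1 indicates compression. For this toy gain, the gain falls from 30 dB at a 50 dB input to 12 dB at an 80 dB input, which gives a compression ratio of 2.5 over that range.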