Introduction

The healthy ear is remarkable: it lets us focus on a particular talker or message even in very noisy conditions. Hearing loss reduces this ability, and hearing aid users often report difficulty picking out individual voices in a crowd or in generally noisy settings such as a party. Deep neural networks (DNNs) can be trained to separate a voice from other voices or from noise, and thereby reduce the difficulty of challenging listening situations. This project investigates the potential benefit of such separation for hearing aid users.

Funded by the William Demant Foundation.

Aims

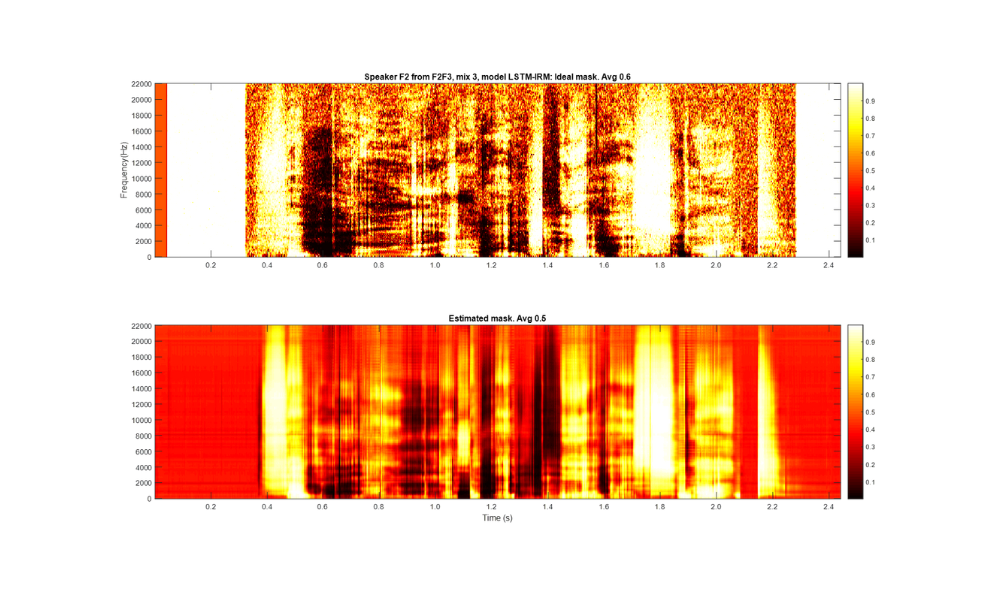

Ideal and estimated ratio mask

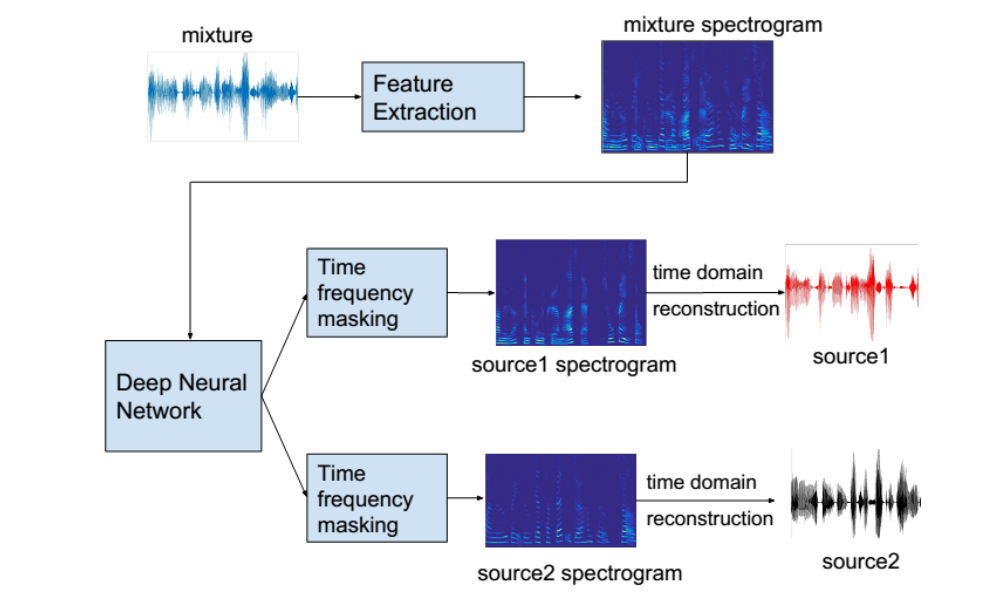

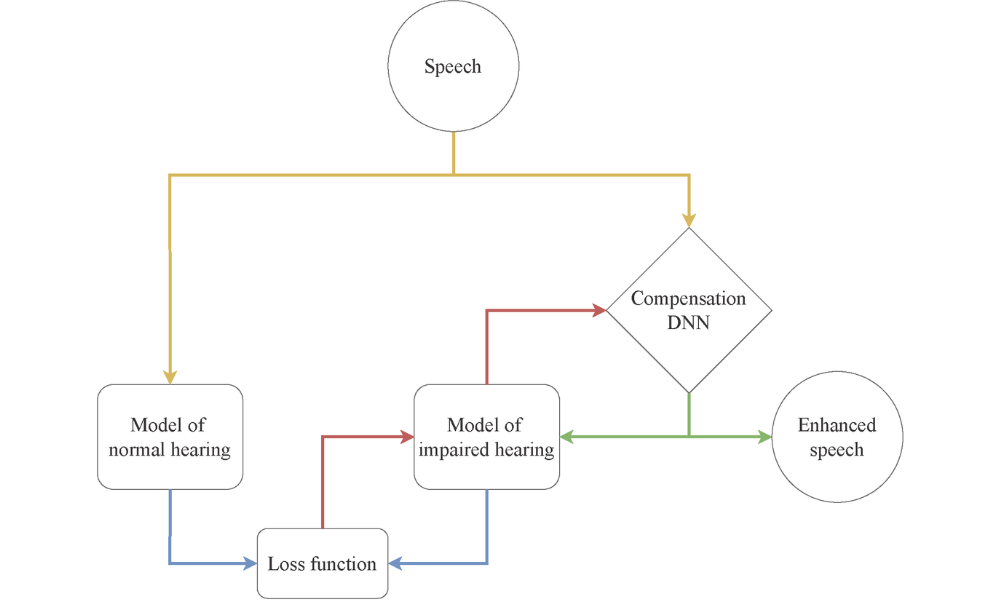
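The ideal and estimated ratio masks are time-frequency gains applied to the short-time spectrum of the mixture. The page does not state the mask definition or analysis parameters, so the following is only a minimal sketch under assumptions: it uses one common form of the ideal ratio mask, IRM = (|S|² / (|S|² + |N|²))^β with β = 0.5, computed from separately known speech S and noise N, and a hypothetical 512-sample STFT with a square-root Hann window at 50% overlap. All function names (`stft`, `istft`, `ideal_ratio_mask`, `apply_mask`) are illustrative, not from the project. The ideal mask needs the clean signals, so it serves as a training target and reference; in use, a DNN would supply the estimated mask instead.

```python
import numpy as np

N_FFT = 512          # frame length in samples (assumed, not from the project)
HOP = N_FFT // 2     # 50% overlap


def _window(n=N_FFT):
    # Square root of a periodic Hann window. Applied at both analysis and
    # synthesis, the product is a Hann window, which sums to 1 at 50% overlap.
    return np.sqrt(0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n) / n))


def stft(x):
    # Zero-pad so every original sample is covered by two overlapping frames.
    x = np.pad(x, (N_FFT, N_FFT))
    n_frames = 1 + (len(x) - N_FFT) // HOP
    frames = np.stack([x[i * HOP:i * HOP + N_FFT] for i in range(n_frames)])
    return np.fft.rfft(frames * _window(), axis=1)  # shape: (frames, bins)


def istft(X, length):
    # Inverse FFT per frame, synthesis window, then overlap-add.
    frames = np.fft.irfft(X, n=N_FFT, axis=1) * _window()
    out = np.zeros((X.shape[0] - 1) * HOP + N_FFT)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + N_FFT] += frame
    return out[N_FFT:N_FFT + length]  # drop the padding added in stft()


def ideal_ratio_mask(speech, noise, beta=0.5, eps=1e-12):
    # Oracle mask: per-bin ratio of speech power to total power, in [0, 1).
    ps = np.abs(stft(speech)) ** 2
    pn = np.abs(stft(noise)) ** 2
    return (ps / (ps + pn + eps)) ** beta


def apply_mask(mixture, mask):
    # Scale each time-frequency bin of the mixture and resynthesize.
    return istft(mask * stft(mixture), len(mixture))
```

A quick check: with a mask of all ones, `apply_mask` returns the mixture unchanged, and with the ideal mask the output should be closer to the clean speech than the unprocessed mixture is.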

Methodology

After the most relevant scenarios were selected, training material was assembled from in-house and public speech and noise corpora and used to train the DNN system. Other excerpts from the same corpora were held out for testing separation performance, first with objective signal measures and then in listening tests with hearing-impaired listeners. In the competing-voice scenario, the two separated outputs were presented one to each ear; in the speech-in-noise scenario, the separated speech was presented to both ears.
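The two presentation modes can be sketched as building a two-channel (left, right) signal from the separated outputs. This is an illustration only: the function names are hypothetical, and assigning the target to the left ear is an assumption, since the page does not say which ear received which voice.

```python
import numpy as np


def present_competing_voices(target, competitor):
    # Dichotic presentation: separated target in one ear (assumed left),
    # separated competing voice in the other (assumed right).
    n = min(len(target), len(competitor))
    return np.stack([target[:n], competitor[:n]], axis=1)  # shape (n, 2)


def present_speech_in_noise(speech):
    # Diotic presentation: the same separated speech in both ears.
    return np.stack([speech, speech], axis=1)  # shape (n, 2)
```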

Separation demo

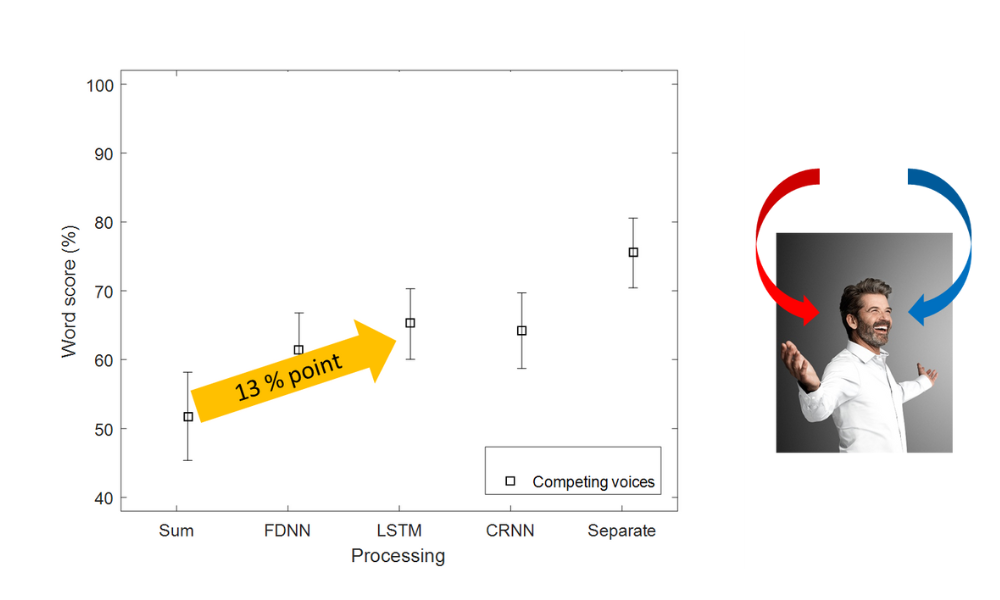

Results

The listening tests documented significant benefits for hearing-impaired listeners:

1) In the presence of one competing talker, speech intelligibility increased by 13 percentage points when the separated target and competing voices were presented one to each ear.

2) In the presence of one competing talker, a benefit of 37 percentage points (from 58% to 95%) was registered when the separated target voice was presented to both ears.

3) A benefit of 16 percentage points was registered when listening to speech separated from party noise.
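The benefits are differences in percentage points, not relative changes. For result 2, the only one where both scores are given, the two readings work out as follows:

```latex
\Delta_{\text{abs}} = 95\,\% - 58\,\% = 37 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{95 - 58}{58} \approx 0.64 \;(\approx 64\,\% \text{ relative improvement})
```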