Introduction

When a listener attends to speech, their brain activity tracks the characteristics of the speech signal. This brain activity can be measured using electroencephalography (EEG) and used to predict which talker the listener is attending to when several people are speaking. Such predictions could help alleviate the major difficulties that individuals with hearing impairment face in multi-talker situations, by telling a hearing aid which talker to amplify. They could also be used to assess the efficacy of hearing aids. This project focused on realistic audiovisual speech understanding with competing monologues and dialogues, to test whether attention can be predicted in realistic settings.
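To make the decoding idea concrete, here is a minimal sketch of one widely used approach, a linear backward model (stimulus reconstruction). Time-lagged EEG is mapped to the speech envelope with ridge regression, and attention is assigned to the talker whose envelope correlates best with the reconstruction. This summary does not state which decoder the project used. The data below are simulated, and the sampling rate, lag range, ridge parameter and the function names `lagged`, `train_decoder` and `decode_attention` are illustrative assumptions.

```python
import numpy as np

def lagged(eeg, max_lag):
    """Stack time-lagged copies of every EEG channel into a design matrix.

    Row t holds EEG samples t, t+1, ..., t+max_lag, because the neural response
    follows the stimulus; rows near the end are zero-padded.
    """
    n, c = eeg.shape
    X = np.zeros((n, c * (max_lag + 1)))
    for lag in range(max_lag + 1):
        X[: n - lag, lag * c:(lag + 1) * c] = eeg[lag:, :]
    return X

def train_decoder(eeg, envelope, max_lag, ridge=1.0):
    """Ridge regression from lagged EEG to the attended envelope."""
    X = lagged(eeg, max_lag)
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ envelope)

def decode_attention(eeg, env_a, env_b, weights, max_lag):
    """Reconstruct the envelope and pick the talker it correlates with best."""
    recon = lagged(eeg, max_lag) @ weights
    r_a = np.corrcoef(recon, env_a)[0, 1]
    r_b = np.corrcoef(recon, env_b)[0, 1]
    return ("A" if r_a > r_b else "B"), r_a, r_b

# --- Simulated data (illustrative only, not the project's recordings) ---
rng = np.random.default_rng(0)
fs = 64                            # assumed sampling rate after downsampling (Hz)
n = fs * 120                       # two minutes of data
n_ch, delay, max_lag = 16, 6, 10   # channels, neural delay (samples), decoder lags

def fake_envelope(n):
    # Low-pass filtered noise as a stand-in for a speech envelope
    return np.convolve(rng.standard_normal(n), np.ones(8) / 8, mode="same")

env_a, env_b = fake_envelope(n), fake_envelope(n)
# Talker A is attended: its delayed envelope drives the EEG more strongly than B's
eeg = (np.outer(np.roll(env_a, delay), rng.standard_normal(n_ch))
       + 0.3 * np.outer(np.roll(env_b, delay), rng.standard_normal(n_ch))
       + rng.standard_normal((n, n_ch)))

# Train on the first half, decode the held-out second half
half = n // 2
w = train_decoder(eeg[:half], env_a[:half], max_lag)
label, r_a, r_b = decode_attention(eeg[half:], env_a[half:], env_b[half:], w, max_lag)
print(label, round(r_a, 2), round(r_b, 2))
```

In practice the decoder is usually trained on a separate set of trials and evaluated with cross-validation; the single train/test split here only keeps the sketch short.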

This work has been partially supported by the Swedish Research Council (Vetenskapsrådet, VR 2017-06092, Mekanismer och behandling vid åldersrelaterad hörselnedsättning [Mechanisms and treatment in age-related hearing loss]).

Aims

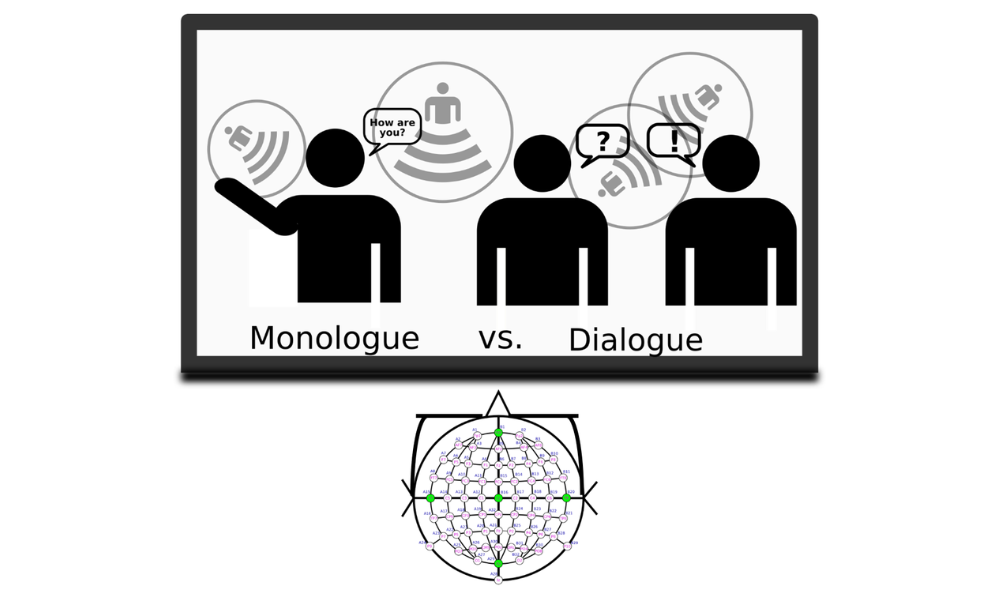

Decoding attention from EEG measures has potential applications in hearing technology, but the method has so far mostly been evaluated in laboratory settings with two competing monologues. In this project we investigated whether the technique also works in more realistic scenarios with a dialogue and a monologue, presented both acoustically and visually. Because the task was audiovisual, eye-gaze measures were considered in addition to EEG.

Methodology

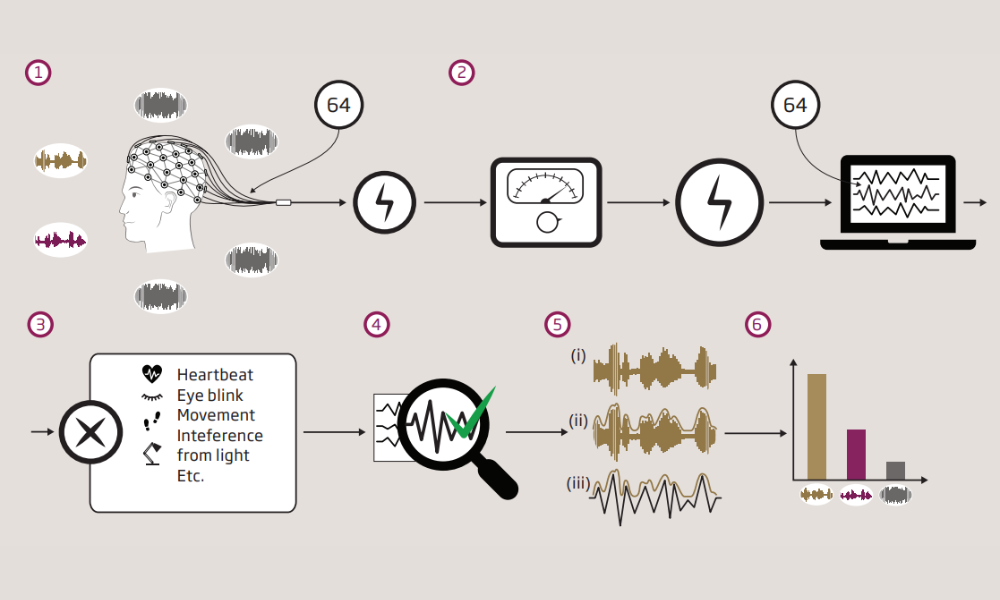

We investigated how normal-hearing participants and hearing-impaired participants wearing hearing aids use their brains and their eyes to solve an audiovisual speech comprehension task. Participants attended either to a monologue while a competing dialogue was presented, or to a dialogue while a competing monologue was presented; in both conditions, additional multi-talker babble noise was also present. During the experiment, the listeners’ brain activity and eye gaze were recorded with EEG and eye-tracking glasses, respectively, and their speech comprehension was assessed with comprehension questions and subjective ratings of difficulty.

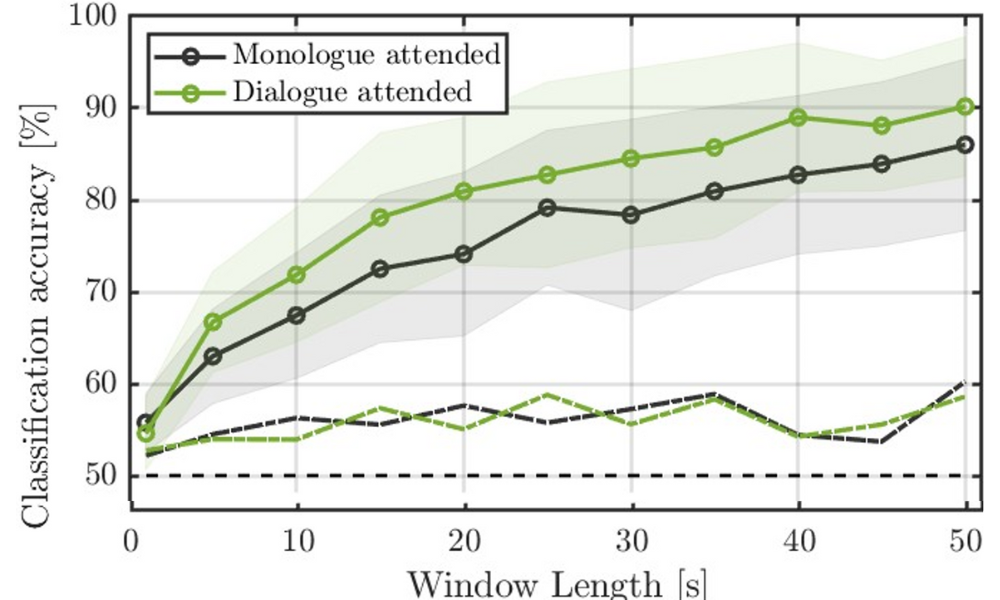

Classification accuracy obtained from normal-hearing listeners’ EEG data as a function of EEG data duration, for the monologue-attended (black line) and dialogue-attended (green line) conditions.
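Why accuracy depends on data duration can be illustrated with a small simulation. Assuming the duration refers to the length of the EEG segment used for each attention decision (the caption does not specify this), shorter segments give noisier correlation estimates, so the attended and unattended talkers are confused more often. The sketch below uses simulated envelopes and a simulated decoder output (attended envelope plus noise); it does not reproduce the project's data or decoder, and the names `smoothed_noise` and `window_accuracy` and all parameter values are hypothetical.

```python
import numpy as np

def smoothed_noise(rng, n, width=8):
    # Hypothetical stand-in for a speech envelope: low-pass filtered Gaussian noise
    return np.convolve(rng.standard_normal(n), np.ones(width) / width, mode="same")

def window_accuracy(recon, env_att, env_unatt, win):
    """Fraction of non-overlapping windows in which the attended envelope correlates best."""
    n_win = len(recon) // win
    correct = 0
    for k in range(n_win):
        s = slice(k * win, (k + 1) * win)
        r_att = np.corrcoef(recon[s], env_att[s])[0, 1]
        r_unatt = np.corrcoef(recon[s], env_unatt[s])[0, 1]
        correct += int(r_att > r_unatt)
    return correct / n_win

rng = np.random.default_rng(1)
fs = 64                      # assumed sampling rate (Hz)
n = fs * 600                 # ten minutes of simulated data
env_att = smoothed_noise(rng, n)
env_unatt = smoothed_noise(rng, n)
# Simulated decoder output: the attended envelope buried in noise
recon = env_att + 2.0 * rng.standard_normal(n)

# Accuracy for decision windows of 1, 5, 10 and 30 seconds
accuracy = {sec: window_accuracy(recon, env_att, env_unatt, sec * fs)
            for sec in (1, 5, 10, 30)}
for sec, acc in accuracy.items():
    print(f"{sec:>2} s windows: {acc:.2f}")
```

The trade-off this shows matters for hearing-aid use: longer windows give more reliable decisions but react more slowly when the listener switches attention.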

Results

The results obtained with the normal-hearing group indicate that EEG-based attention decoding is indeed possible in an audiovisual monologue-versus-dialogue setting. Although speech comprehension was similar in the monologue-attended and dialogue-attended conditions, the dialogues appeared to be more strongly represented in the brain activity than the monologues.

Gaze behaviour also differed clearly between the two conditions: participants either switched their gaze between the two dialogue talkers or fixated on the single monologue talker. Whether these findings also hold for the hearing-impaired group is currently being analyzed.

Eye-gaze behaviour of a normal-hearing listener when attending to an audiovisual dialogue (A) or monologue (B).
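The difference in gaze behaviour between the dialogue and monologue conditions could be quantified, for example, by counting how often gaze switches between areas of interest (AOIs) such as the talkers' faces. The sketch below assumes the eye-tracking data have already been mapped to one AOI label per sample, with `None` for gaze outside all AOIs. The function name `gaze_summary`, the labels and the 50 Hz sampling rate are hypothetical, and this is not necessarily the analysis used in the project.

```python
from itertools import groupby

def gaze_summary(aoi_samples, sample_rate_hz):
    """Summarize a non-empty sequence of per-sample AOI labels.

    None marks samples where gaze fell outside every AOI (e.g. blinks).
    """
    # Collapse runs of identical labels into dwells, dropping off-AOI runs
    dwells = [(label, len(list(run)))
              for label, run in groupby(aoi_samples) if label is not None]
    # Merge dwells on the same AOI that were separated only by off-AOI samples
    merged = []
    for label, length in dwells:
        if merged and merged[-1][0] == label:
            merged[-1] = (label, merged[-1][1] + length)
        else:
            merged.append((label, length))
    switches = max(len(merged) - 1, 0)
    on_aoi = sum(length for _, length in merged)
    # Share of on-AOI time spent on each AOI
    proportions = {}
    for label, length in merged:
        proportions[label] = proportions.get(label, 0) + length / on_aoi
    duration_s = len(aoi_samples) / sample_rate_hz
    return {"switches": switches,
            "switches_per_min": 60 * switches / duration_s,
            "dwell_proportion": proportions}

fs = 50  # assumed eye-tracker sampling rate (Hz)
# Hypothetical 6-second excerpts: gaze alternating between two dialogue talkers,
# versus steady fixation on one monologue talker interrupted by a blink
dialogue = ["talker1"] * 100 + ["talker2"] * 80 + [None] * 5 + ["talker1"] * 115
monologue = ["talker"] * 140 + [None] * 10 + ["talker"] * 150
print(gaze_summary(dialogue, fs))
print(gaze_summary(monologue, fs))
```

Merging dwells across short off-AOI gaps keeps blinks from being counted as switches; a real analysis would typically also apply a minimum gap or dwell duration.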