Introduction

Children with hearing impairment are at risk of delays in language acquisition, educational achievement, and socio-emotional development, and of reduced well-being. Current intervention plans fail to prepare these children for academic achievement and social participation in contemporary society, where the diversity of their needs is increasing.

Comm4CHILD is an Innovative Training Network (ITN), a large consortium that addresses the considerable inter-individual heterogeneity in brain plasticity, cognitive resources, and linguistic abilities. It takes full advantage of this heterogeneity to support the development of efficient communication skills in children with hearing impairment. A group of 15 early-stage researchers (ESRs) will be trained in research and intervention across sectors. Comm4CHILD is a European project funded by the European Union's Horizon 2020 research and innovation programme under Marie Skłodowska-Curie grant agreement n°860755.

Aims

The fifteen individual research projects that make up Comm4CHILD are organised into three work packages: biology (e.g., anatomical variations of the cochlea and functional reorganisation of the brain), cognition (e.g., working memory and multimodal integration in communication), and language (e.g., inter-individual differences in speech intelligibility and spelling ability).

The transversal objectives of the ESRs are to improve the mapping of the factors underlying this heterogeneity, to advance understanding of the predictors of linguistic and communication skills, and to develop new intervention methods. A strong focus on training, interaction with stakeholders, and dissemination of findings shapes this innovative training network.

Methodology

Alina Schulte is a Comm4CHILD PhD student based at Eriksholm. She investigates how vibrotactile input on the skin can influence auditory speech perception. In the first part of her experiment, Alina conducted a speech test with sentences presented in three conditions: 1) auditory alone, 2) auditory + tactile speech envelope, and 3) auditory + tactile noise. The tactile speech envelope consisted of low-frequency information from the speech signal, delivered through a vibrating probe on the index fingertip.
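The article does not describe how the tactile envelope was generated. To make the idea of "low-frequency information" concrete, here is a minimal sketch of one common approach, offered as an assumption rather than the study's actual signal chain. It takes the Hilbert envelope of the speech waveform, low-pass filters it, and uses it to modulate a vibrotactile carrier. The function names, the 10 Hz cutoff, and the 250 Hz carrier frequency are all hypothetical choices for illustration. Only NumPy is used, and the low-pass filter is a simple FFT brick-wall filter, not a production-quality design.

```python
import numpy as np


def hilbert_envelope(x):
    """Magnitude of the analytic signal, computed via the FFT (hypothetical helper)."""
    n = len(x)
    spectrum = np.fft.fft(x)
    # Weights that keep DC (and Nyquist for even n), double positive frequencies,
    # and zero negative frequencies, giving the analytic signal after the inverse FFT.
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(spectrum * h))


def lowpass_fft(x, fs, cutoff_hz):
    """Zero-phase brick-wall low-pass: zero every FFT bin above cutoff_hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def tactile_envelope(speech, fs, cutoff_hz=10.0, carrier_hz=250.0):
    """Hypothetical tactile drive signal: a slow speech envelope on a vibrotactile carrier.

    cutoff_hz and carrier_hz are illustrative assumptions, not the study's parameters.
    """
    env = lowpass_fft(hilbert_envelope(speech), fs, cutoff_hz)
    env = np.clip(env, 0.0, None)  # remove small negative ringing from the brick-wall filter
    peak = env.max()
    if peak > 0:
        env = env / peak  # normalise so the drive signal stays within [-1, 1]
    t = np.arange(len(speech)) / fs
    return env * np.sin(2 * np.pi * carrier_hz * t)
```

As a usage example, passing a 1 s recording sampled at 16 kHz to `tactile_envelope(speech, 16000)` returns a 250 Hz vibration whose amplitude follows the syllabic rhythm of the speech. An amplitude envelope on a carrier is used here because very slow envelope fluctuations alone are hard to feel on the fingertip. A real setup would likely use a proper filter design and calibrate the output to the actuator.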

In a second part of the experiment, the test was repeated in a modified version with simultaneous functional near-infrared spectroscopy (fNIRS) recordings to identify cortical activation patterns related to audio-tactile speech processing.

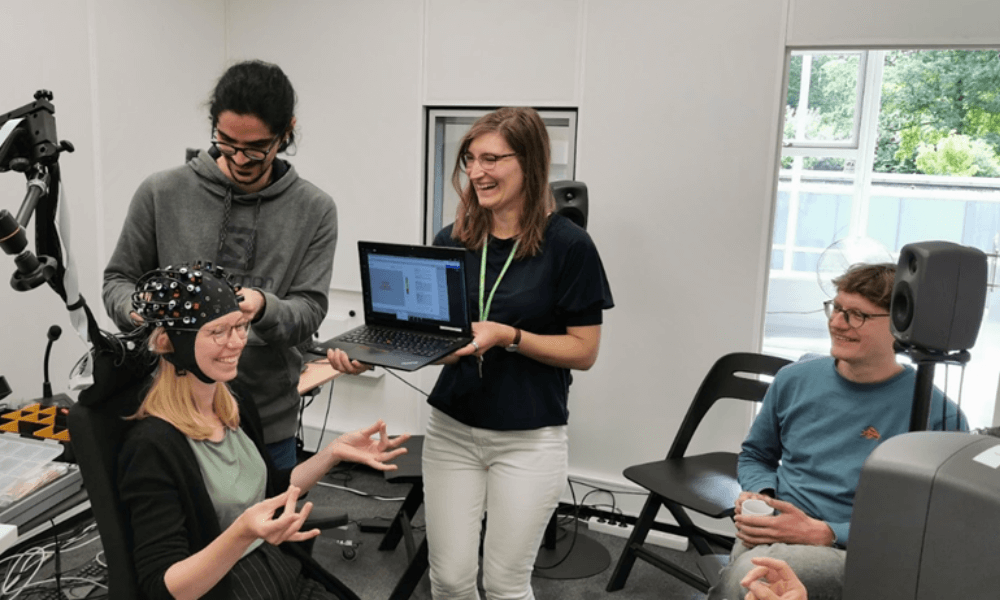

fNIRS system in Pitch Lab at Eriksholm. Alina is wearing an fNIRS cap and performing a finger-tapping task, a common experiment for testing the set-up.

Results

Data were collected from 23 normal-hearing (NH) participants and 14 cochlear implant (CI) users. Consistent with previous literature, speech understanding scores were significantly higher in the auditory + tactile speech envelope condition than in the auditory alone condition for both groups (an improvement of 5.3% for NH and 5.4% for CI participants).

In line with the principle of inverse effectiveness, we found in the normal-hearing group that this benefit was larger for participants with lower auditory-alone speech intelligibility. This suggests that the brain benefits most from tactile information when the auditory signal is most degraded.
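One simple way to express inverse effectiveness numerically is to compute each participant's tactile benefit (auditory + tactile score minus auditory-alone score) and then check whether that benefit falls as the auditory-alone score rises. A negative slope or correlation indicates this pattern. The sketch below is an illustration under that assumption, not the analysis reported in the study. The function names are hypothetical, and the toy scores in the usage note are invented numbers, not study data.

```python
import numpy as np


def tactile_benefit(audio_alone, audio_tactile):
    """Per-participant benefit: auditory + tactile score minus auditory-alone score."""
    return np.asarray(audio_tactile, dtype=float) - np.asarray(audio_alone, dtype=float)


def inverse_effectiveness_fit(audio_alone, audio_tactile):
    """Fit benefit ~ auditory-alone score with a straight line (hypothetical helper).

    A negative slope and correlation mean the benefit grows as auditory-alone
    intelligibility drops, which is the pattern inverse effectiveness predicts.
    """
    baseline = np.asarray(audio_alone, dtype=float)
    benefit = tactile_benefit(audio_alone, audio_tactile)
    slope, intercept = np.polyfit(baseline, benefit, 1)
    r = np.corrcoef(baseline, benefit)[0, 1]
    return slope, intercept, r
```

With invented scores `audio_alone = [20, 40, 60, 80]` and `audio_tactile = [32, 48, 64, 80]`, the benefits are 12, 8, 4, and 0 points, which gives a slope of -0.2 and a correlation of -1. The baseline score appears on both sides of this comparison, so regression to the mean can exaggerate such a correlation, and a real analysis would need to account for that.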