Introduction

If a hearing aid can decode which sound source the user wishes to attend to, the user can control which sounds are amplified simply by attending to them. The COCOHA project examined how to decode the user’s intent.

COCOHA was an EU Horizon 2020 project running from 1 January 2015 to 31 December 2018. Read more about COCOHA on the project website: https://cocoha.org/

Aims

The COCOHA project aimed to create a basis for hearing aids that can be controlled by the intent of the user. The core idea of the project was based on the 2015 work by James O’Sullivan et al. on auditory attention decoding, which demonstrated that a speaker’s voice is encoded more strongly in the listener’s brain when the speaker is attended than when the speaker is ignored. Additionally, a fallback solution was formulated, in which the hearing aid selects the audio stream that the user is visually attending to. Hence, two final demonstrators were defined:

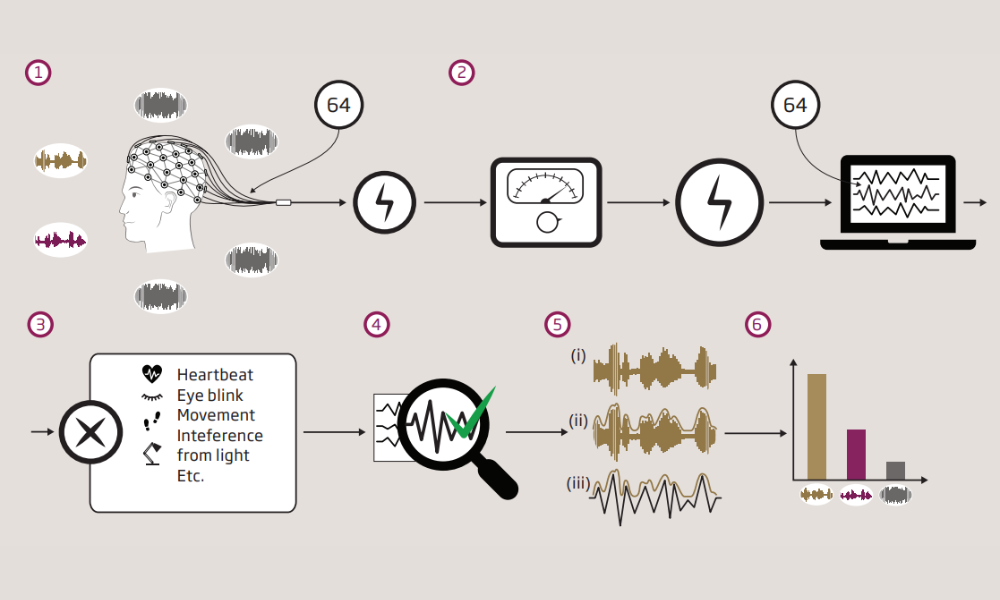

Demonstrator 1: A real-time demonstrator that uses brain waves from scalp EEG or Ear-EEG to decode which speaker the user is attending to and amplifies that speaker’s voice.

Demonstrator 2: A real-time demonstrator that combines eye gaze from Ear-EOG sensors with head orientation estimated from motion trackers to amplify the voice of the person the user is looking at.
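To make the idea behind Demonstrator 1 more concrete, the sketch below illustrates one common way to turn the finding on attended-speaker encoding into a selection rule: correlate a speech envelope reconstructed from EEG with the envelope of each candidate speaker and amplify the best match. This is a minimal illustration under assumptions, not the COCOHA implementation. The function names, the toy envelope values and the gain values are all hypothetical, and the step that reconstructs the envelope from EEG is assumed to have happened elsewhere.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("sequences must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def select_attended(reconstructed_envelope, speaker_envelopes):
    """Pick the speaker whose envelope correlates best with the EEG-based reconstruction."""
    scores = {
        name: pearson(reconstructed_envelope, envelope)
        for name, envelope in speaker_envelopes.items()
    }
    attended = max(scores, key=scores.get)
    return attended, scores


def gains_db(attended, speaker_names, boost_db=6.0, cut_db=-6.0):
    """Hypothetical gain rule: boost the attended speaker, attenuate the others."""
    return {name: (boost_db if name == attended else cut_db) for name in speaker_names}


# Toy data (assumed): the reconstruction follows speaker_a more closely than speaker_b.
reconstructed = [0.1, 0.5, 0.9, 0.4, 0.2, 0.7]
speakers = {
    "speaker_a": [0.2, 0.6, 1.0, 0.5, 0.3, 0.8],
    "speaker_b": [0.9, 0.1, 0.3, 0.8, 0.6, 0.2],
}
attended, scores = select_attended(reconstructed, speakers)
gains = gains_db(attended, speakers)
```

In a real-time setting the correlation would be computed over a sliding window of recent data, and the window length would trade decoding accuracy against how quickly the system follows a switch of attention.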

Methodology

Eriksholm Research Centre was responsible for integrating the findings of all work packages into a real-time prototype hearing aid consisting of behind-the-ear shells with a microphone, dry electrodes (without amplifiers) and a processing unit. This work package also developed the eye gaze steering algorithm needed for Demonstrator 2.
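The project's actual gaze steering algorithm is not described here. As a rough illustration of the principle only, the sketch below combines a head orientation angle with an eye-in-head angle into an absolute gaze direction and selects the talker closest to it. All names, the known talker directions and the 15-degree tolerance are assumptions made for this example.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def gaze_azimuth(head_yaw_deg, eye_in_head_deg):
    """Absolute gaze direction: head orientation plus eye angle relative to the head."""
    return wrap_deg(head_yaw_deg + eye_in_head_deg)


def select_gazed_talker(gaze_deg, talker_azimuths_deg, tolerance_deg=15.0):
    """Return the talker nearest to the gaze direction, or None if none is within tolerance."""
    best_name = None
    best_error = None
    for name, azimuth in talker_azimuths_deg.items():
        error = abs(wrap_deg(azimuth - gaze_deg))
        if best_error is None or error < best_error:
            best_name, best_error = name, error
    if best_error is None or best_error > tolerance_deg:
        return None
    return best_name


# Assumed scene: three talkers at known directions relative to the room.
talkers = {"left": -30.0, "front": 0.0, "right": 30.0}

# Head turned 20 degrees to the right, eyes a further 12 degrees to the right.
gaze = gaze_azimuth(head_yaw_deg=20.0, eye_in_head_deg=12.0)
target = select_gazed_talker(gaze, talkers)
```

Returning `None` when no talker lies within the tolerance is one possible design choice: it lets the device fall back to its default behaviour instead of amplifying a source the user is not looking at.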

Results

Eriksholm contributed to the project by creating a real-time prototype that hosts both demonstrators and by advancing the understanding of how gaze information can be used to steer hearing devices. In this video you can see how the system works.

Eriksholm also gathered end-user insights into how users perceive the idea of using eye gaze to steer future hearing devices. Here you can find several testimonial videos from hearing-impaired test participants.